What is it?

Chain of thought prompting means asking ChatGPT (or another LLM) to explain its reasoning step by step as it works toward an answer.

It is a simple technique that can noticeably improve answers to math and logic problems, so it is well worth understanding.

It is also another oddly named term, like zero-shot and few-shot prompting. The concept is simple, so don’t let the strange name fool you.

What is an example of Chain of Thought prompting?

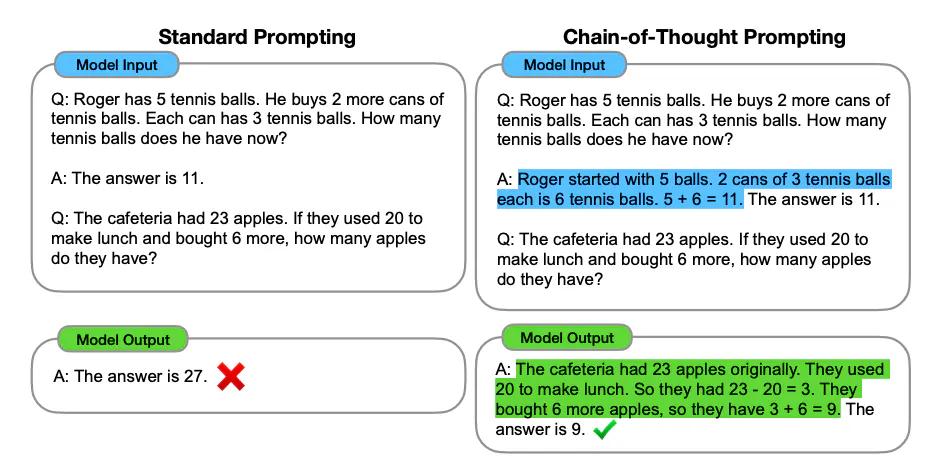

I’ll start with the example from Wei et al. (2022). This is the example almost everyone uses. (I’ll show my own example below.)

In this first example, if you simply ask the model for the answer to a fairly basic reasoning problem, it will give you one. The problem is that the answer may be wrong, and you won’t see the “thought process” or the reasoning behind it.

If you instead get the model to work through the solution step by step, it not only shows its reasoning but is also much more likely to reach the correct answer.

These LLMs can use logic and reasoning to some extent. When you ask one to explain its steps, each step it writes becomes part of the text it builds on for the next step, which gives it a chance to spot flaws or contradictions in its own reasoning along the way.

If you only ask for the answer, it may skip reasoning steps and reply with the first thought that “comes to mind”.

Interestingly, humans behave similarly. If you ask someone to explain their reasoning while solving a problem, they are more likely to get the correct answer. This is a major reason teachers ask math students to “show their work”.

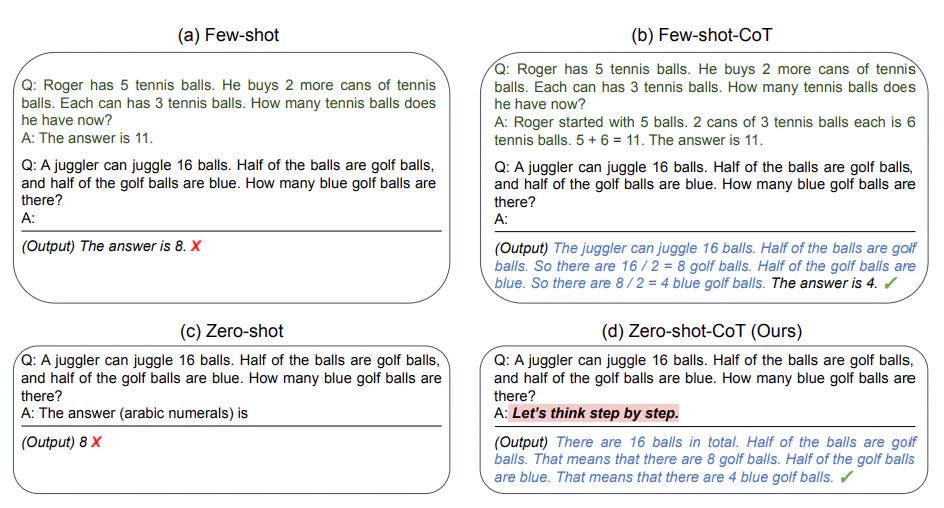

This next example compares the answers produced by four types of prompting:

- Few-shot prompting

- Few-shot chain of thought prompting

- Zero-shot prompting

- Zero-shot chain of thought prompting

Read our article on zero-shot vs few-shot prompting to remind yourself of this concept.

You can see that both the “few-shot chain of thought” and “zero-shot chain of thought” strategies arrive at the correct answer, while the other two strategies get it wrong.

The lesson here is that you can either show ChatGPT an example of how to reason and ask it to reason the same way (few-shot chain of thought), or simply ask it to “think step by step” (zero-shot chain of thought).
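
If you call a model from code instead of typing into ChatGPT, the two chain of thought strategies differ only in the text of the prompt you send. The sketch below builds both kinds of prompt as plain strings. The function names, the Q:/A: layout, and the worked apple example are hypothetical choices for illustration, not any library’s API; “Let’s think step by step.” is the commonly used zero-shot trigger phrase.

```python
# A minimal sketch of building chain-of-thought prompts as plain strings.
# Function names and the Q:/A: layout are hypothetical, not any library's API.

def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: no examples, just a 'think step by step' trigger after 'A:'."""
    return f"Q: {question}\nA: Let's think step by step."


def few_shot_cot(examples: list[tuple[str, str]], question: str) -> str:
    """Few-shot CoT: show worked examples whose answers spell out the reasoning,
    then ask the new question in the same format for the model to continue."""
    parts = [f"Q: {q}\nA: {reasoning}" for q, reasoning in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical worked example whose answer shows each reasoning step.
EXAMPLES = [
    (
        "Tom has 3 apples and buys 2 more. How many apples does he have?",
        "Tom starts with 3 apples. He buys 2 more, so 3 + 2 = 5. The answer is 5.",
    ),
]

if __name__ == "__main__":
    question = (
        "A shelf holds 12 books. 4 are removed and 7 are added. "
        "How many books are on the shelf?"
    )
    print(zero_shot_cot(question))
    print("---")
    print(few_shot_cot(EXAMPLES, question))
```

The only real difference is whether you supply reasoning examples. In both cases, the resulting string is what you would send to the model, which then continues the text after the final “A:”.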

My own example of chain of thought prompting

I didn’t want to stop at the two examples from the papers, as most other articles do. I wanted to test this myself with my own example to see how well it works.

I found an 8th-grade math word problem online, entered it into ChatGPT, and asked for a one-number answer:

Annie had 21 stickers. She bought 6 stickers from a store in the mall and got 18 stickers for her birthday. Then Annie gave 2 of the stickers to her sister and used 13 to decorate a greeting card. How many stickers does Annie have left?

It answered 52 (which is wrong).

I then prompted:

Answer this question again, but this time explain your reasoning at each step

It answered: Let’s break down the problem step by step:

- Starting with stickers Annie had: Annie initially had 21 stickers.

- Stickers bought from a store: She then bought 6 more stickers. Adding these to her initial count, 21 + 6 = 27 stickers.

- Stickers received for her birthday: Annie received 18 stickers for her birthday. Adding these to her current total, 27 + 18 = 45 stickers.

- Stickers given to her sister: Annie gave 2 stickers to her sister. Subtracting these from her total, 45 – 2 = 43 stickers.

- Stickers used to decorate a greeting card: Annie used 13 stickers to decorate a greeting card. Subtracting these from her total, 43 – 13 = 30 stickers.

So, after all these transactions, Annie has 30 stickers left.

This is the correct answer and the correct reasoning!
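
The arithmetic in ChatGPT’s explanation is easy to check independently. This short Python snippet mirrors each step as a running total; the step labels are my own wording, not part of the original problem.

```python
# Recompute Annie's stickers step by step, mirroring ChatGPT's explanation.
steps = [
    ("starting stickers", +21),
    ("bought at the store", +6),
    ("birthday gift", +18),
    ("given to her sister", -2),
    ("used on a greeting card", -13),
]

total = 0
for label, change in steps:
    total += change
    # Show the change and the new running total after each step.
    print(f"{label:<25} {change:+4d} -> {total}")

print("Stickers left:", total)  # 30
```

The running totals (21, 27, 45, 43, 30) match the five bullet points in ChatGPT’s answer, confirming that 30 is correct and 52 is not.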

Why does chain of thought prompting work?

We discussed this in the section above, but for a second opinion, I asked ChatGPT directly, continuing the same chat about the sticker problem.

I asked ChatGPT: why did you get it wrong the first time and right the second time? When I asked you to explain step by step, why did that make you get it correct?

ChatGPT answered: When asked for just a number initially, it’s possible to make a mistake due to a lack of careful consideration of each step in the problem. Calculating in a rush or without explicitly breaking down the problem can lead to oversight or simple arithmetic errors. This is especially true for mental calculations, where the risk of skipping a step or miscalculating a number is higher.

In contrast, when asked to explain the reasoning step by step, it necessitates a more methodical approach. Breaking down the problem into its individual components forces a more careful consideration of each step and how it contributes to the final answer. This process helps to catch any mistakes made during the initial mental calculation. Additionally, articulating the reasoning helps to clarify the process, making it easier to identify any errors and correct them before arriving at the final answer.

In essence, the act of explaining the steps out loud or writing them down serves as a form of error checking, ensuring a more accurate answer. This approach is beneficial not just for mathematical problems but for problem-solving in general, as it encourages thoroughness and attention to detail.

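It is often interesting to ask ChatGPT why it does what it does. Its explanation is not guaranteed to be accurate, since a model’s description of its own behavior is itself generated text rather than a direct view of how it works, but the answer can be insightful and is worth thinking about.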